Control Layer Overview

The Doubleword Control Layer is the world's fastest AI model gateway, with 450x less overhead than LiteLLM (see the Benchmarking Writeup under Key Resources). It provides a single, high-performance interface for routing, managing, and securing LLM inference across model providers, users, and deployments, both open-source and proprietary.

Transform your AI infrastructure from scattered API calls to a centralized, secure, and manageable platform that scales with your organization.

Key Resources

GitHub Repo — Explore the open-source Control Layer code.

Announcement Blog — Read the launch announcement and background story.

Demo Video — Watch the Control Layer in action.

CTO Blog — Technical deep dive by Fergus Finn.

Benchmarking Writeup — Performance comparison vs alternatives.

Key Capabilities

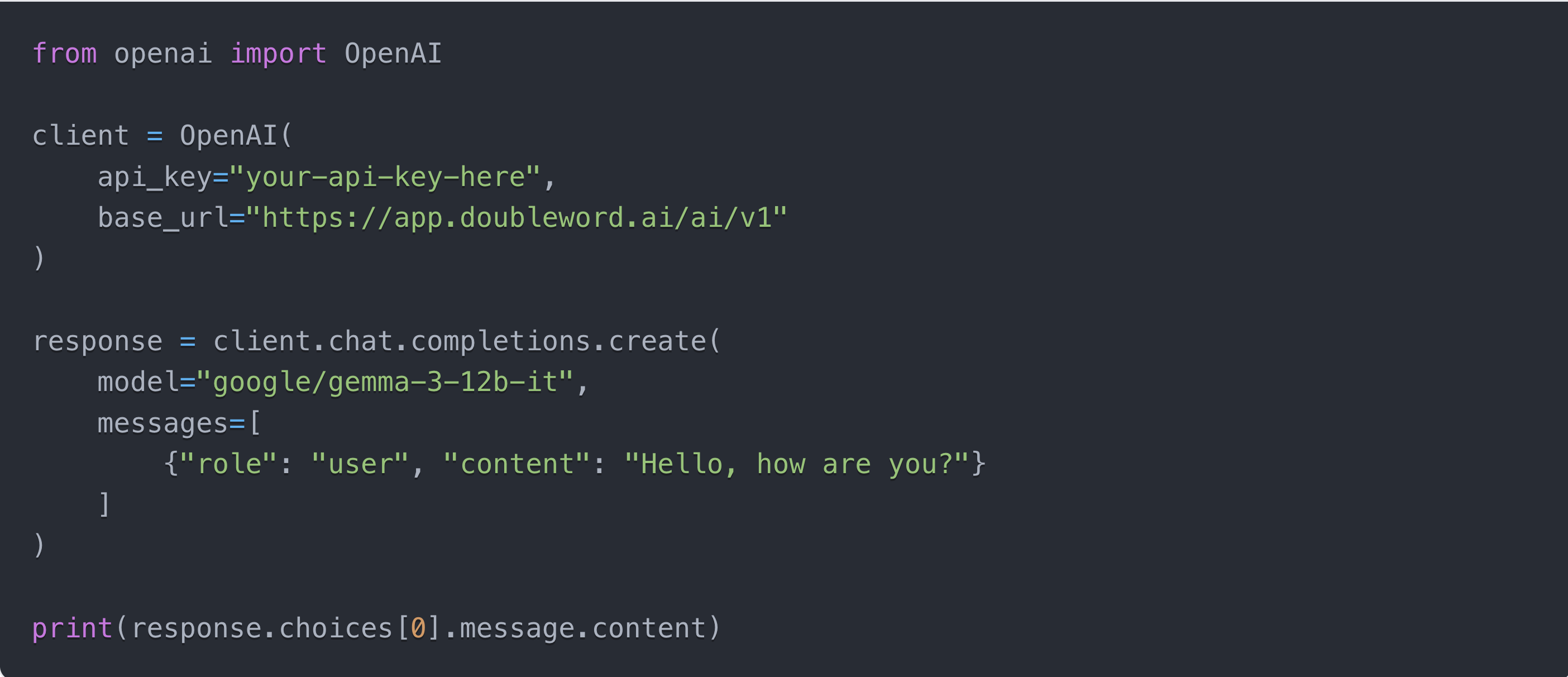

OpenAI-Compatible API

A single OpenAI-compatible gateway to all of your AI models across all your providers. Existing OpenAI-client code needs no changes beyond configuration: point your applications at the Control Layer's /ai/ endpoints and supply a Control Layer API key.
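As a rough illustration of what "point your applications at the /ai/ endpoints" can look like, the sketch below builds an OpenAI-style chat completion request using only the Python standard library. The host and port, the `/v1/chat/completions` path suffix after `/ai/`, the model name, and the placeholder key are all assumptions made for this example and are not taken from the Control Layer documentation; substitute the values from your own deployment.

```python
import json
import urllib.request

# Assumptions for illustration only: the host/port, the "/v1" suffix after
# "/ai/", and the model name are hypothetical. Use your deployment's values.
CONTROL_LAYER_BASE_URL = "http://localhost:3001/ai/v1"
API_KEY = "YOUR_CONTROL_LAYER_API_KEY"  # placeholder, not a real key


def build_chat_request(prompt, model="example-model",
                       base_url=CONTROL_LAYER_BASE_URL, api_key=API_KEY):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The Control Layer API key is sent as a bearer token,
            # the same way an OpenAI key would be.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    req = build_chat_request("Hello from the Control Layer")
    print(req.get_method(), req.full_url)
    # Uncomment once a gateway is reachable at the assumed URL:
    # print(send(req))
```

If you already use an OpenAI client library, the equivalent change is typically just overriding its base URL and API key settings; the request and response shapes stay the same.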

Learn more about adding endpoints →

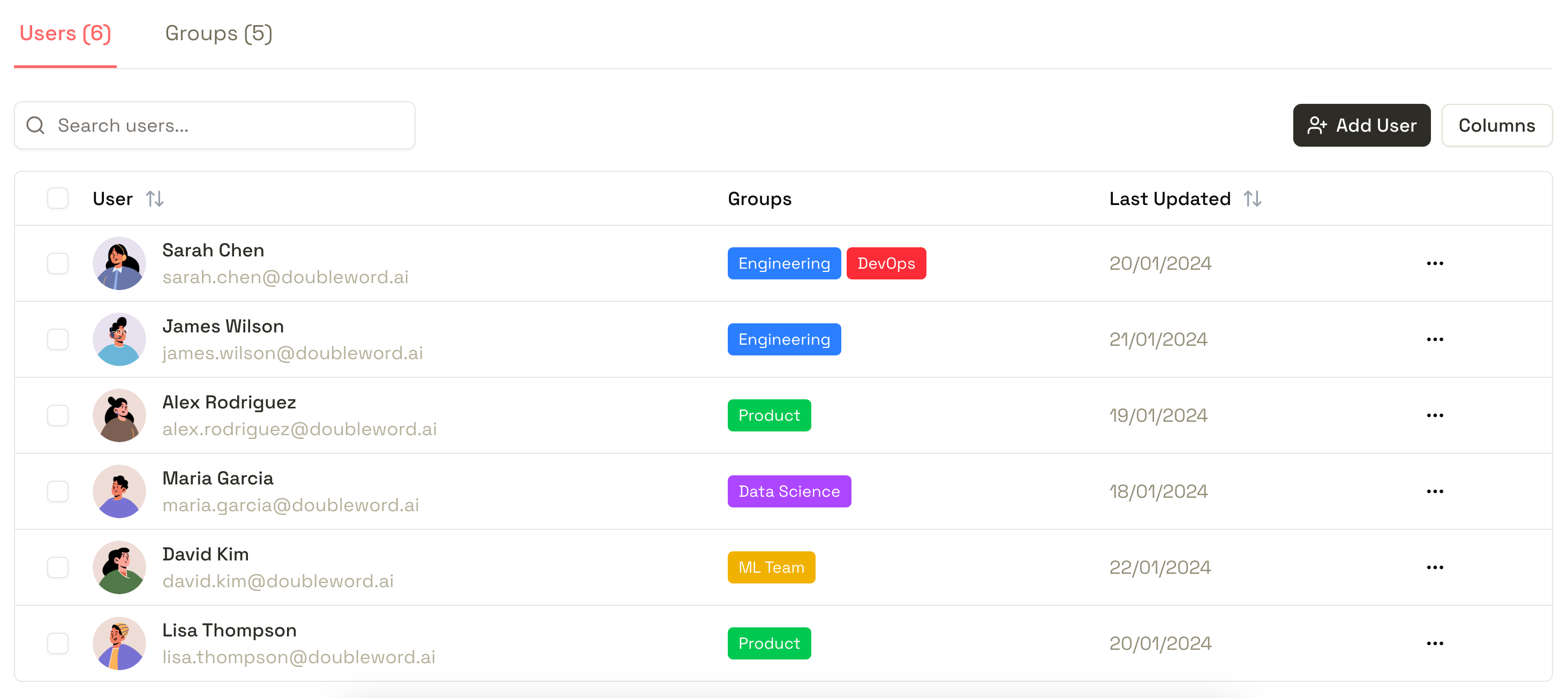

Centralized User Management & API Key Authentication

Manage all the users that can access your AI models from one interface. Map users to groups, let users create their own API keys, and monitor usage across your organization.

The Control Layer turns unauthenticated, self-hosted model deployments into production-ready services with user authentication and access control.

Learn more about Users & Groups →

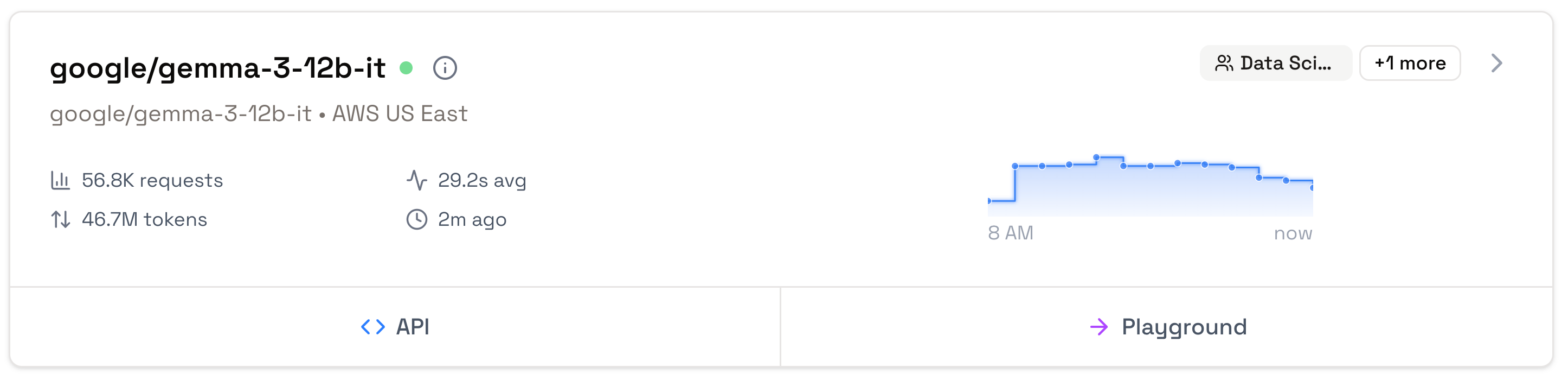

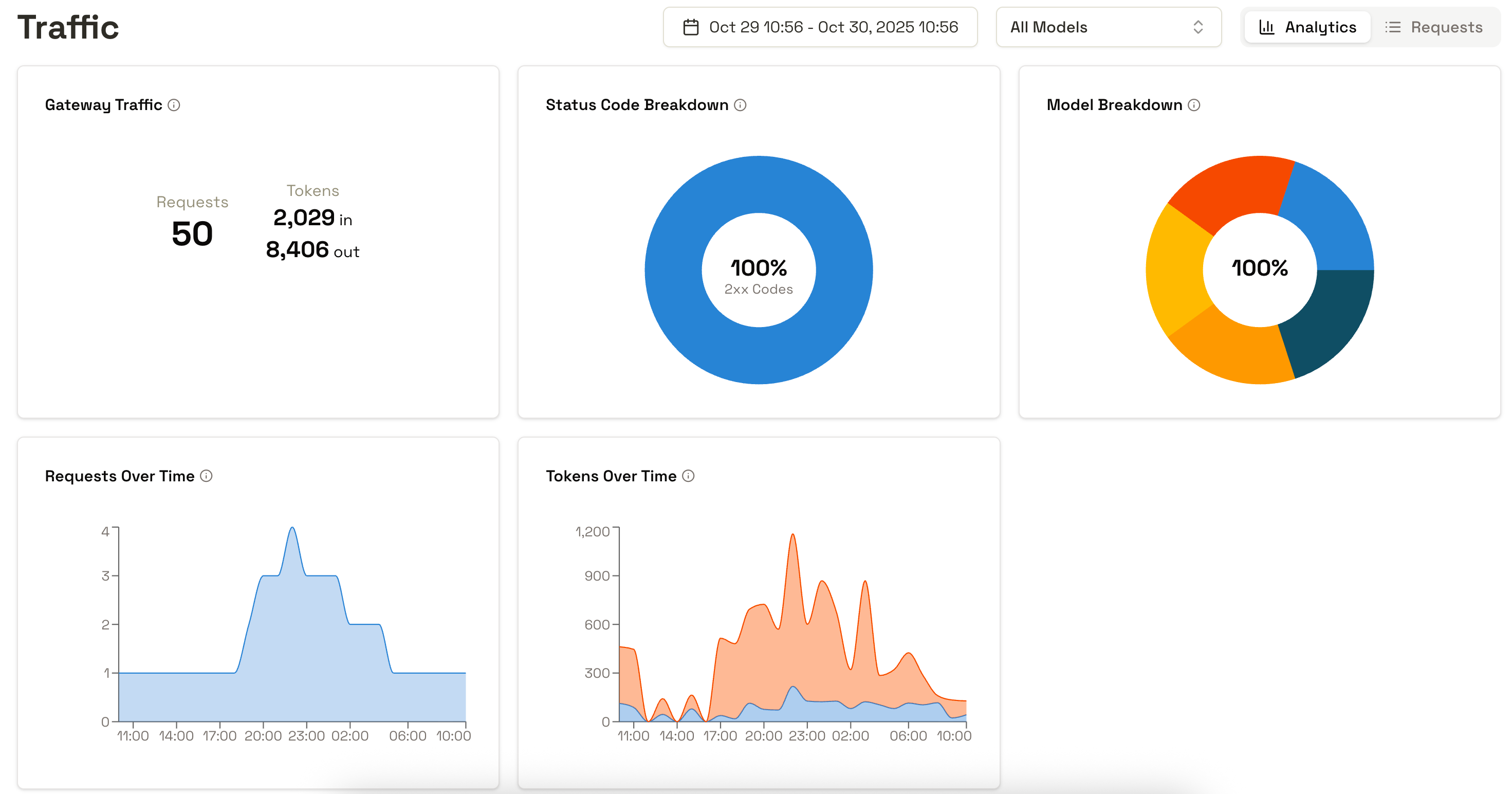

Real-Time Monitoring & Analytics

Track request volumes, token usage, response times, and model performance across your entire organization. Built-in analytics help you understand usage patterns, optimize costs, and identify bottlenecks.

Drill down into user behaviour across providers with opinionated analytics that show which models are being used, by whom, and for what purposes.

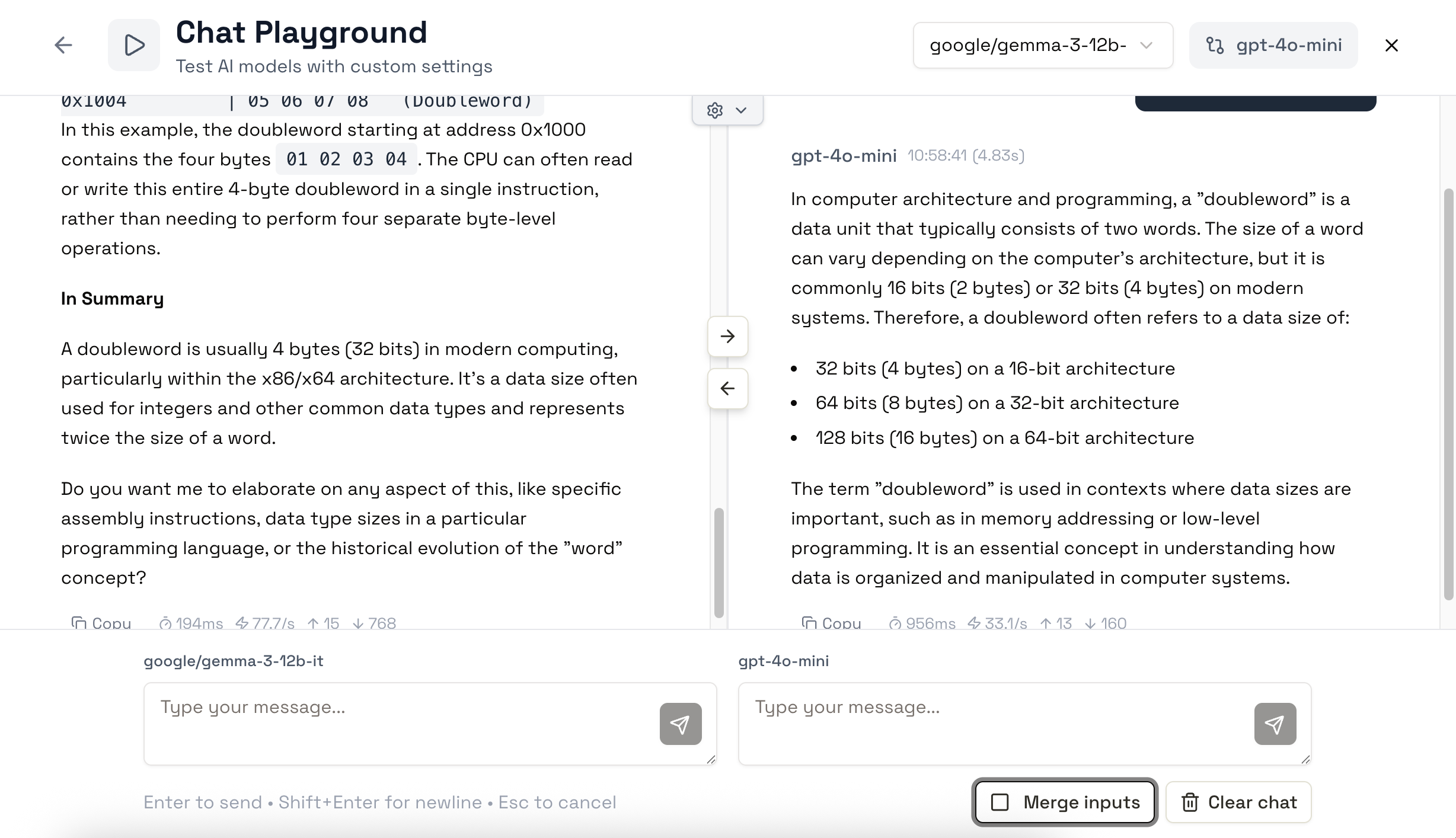

Interactive Playground

Test and compare generative, embedding, and reranker models side-by-side before integrating them into your applications.